Introduction

Reasoning, according to the Cambridge dictionary [1], is the process of thinking about something in order to make a decision. We are used to thinking about reasoning as an inherently human task. However, researchers have found strategies to elicit a similar process in Large Language Models (LLMs).

In this article I cover some of the techniques that Denny Zhou and his team of researchers at DeepMind have tested, including what worked and what didn't. I'll start by summarising the key takeaways, so if you're short on time, you can read that section and have a general idea of where we are today. After that, I'll expand on the fun stuff, review some of the relevant research and do some experimenting of my own.

Artificial intelligence isn't actually intelligent (yet), nor is it a human. I will use words such as reasoning or thinking in relation to LLMs even though they are not strictly appropriate. It is important to remember that LLMs are just parroting behaviour, and what we're trying to do is make the most of it.

This article is a summary of Lecture 1 - LLM Reasoning, by Denny Zhou (Google DeepMind) for the LLM Agents MOOC.

Summary

Generating intermediate steps seems to improve LLM performance. A number of studies [2][3][4] looked at this, and it doesn't make much difference whether the reasoning is included as part of training, fine-tuning, or directly in the prompt. As long as the LLM works through intermediate steps before giving the final answer, you generally get better responses.

Common prompting techniques include 0-shot prompting (just ask for what you want) and 1-shot or few-shot prompting (provide one or more examples of what good looks like). The most well-known strategy is chain-of-thought prompting (CoT), in which we aim to elicit a sort of reasoning behaviour in the response. It can be achieved by including an example of reasoning in your prompt, but even adding a simple "Think through this problem step by step" should give you improved results overall.
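To make the difference between these techniques concrete, here is a minimal sketch of how the three prompt styles could be assembled as plain strings. The function names, the `Q:`/`A:` layout and the arithmetic questions are my own illustrative assumptions, not something taken from the lecture, and the sketch only builds the text; it does not call any model.

```python
from typing import List, Tuple


def zero_shot(question: str) -> str:
    """0-shot: just ask for what you want."""
    return f"Q: {question}\nA:"


def few_shot(question: str, examples: List[Tuple[str, str]]) -> str:
    """Few-shot: show question/answer pairs before the real question."""
    shots = "\n\n".join(f"Q: {q}\nA: {a}" for q, a in examples)
    return f"{shots}\n\nQ: {question}\nA:"


def zero_shot_cot(question: str) -> str:
    """0-shot CoT: add a step-by-step instruction instead of examples."""
    return f"Q: {question}\nThink through this problem step by step.\nA:"


if __name__ == "__main__":
    # Hypothetical questions, used only to show the shape of each prompt.
    print(zero_shot("What is 17 + 25?"))
    print()
    print(few_shot("What is 17 + 25?", [("What is 2 + 3?", "5")]))
    print()
    print(zero_shot_cot("What is 17 + 25?"))
```

For few-shot CoT, the answers in `examples` would contain the worked reasoning rather than only the final result.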

There are a few other prompting techniques that researchers identified to improve the performance of LLMs:

Least-to-Most Prompting: Teach the LLM to solve smaller problems first. [5]

Analogical Prompting: The LLM recalls similar problems and applies the same strategies. [6]

Below, I expand on all of these concepts. There was also interesting work suggesting that LLMs might be reasoning inherently, without any specific prompting. This type of reasoning can be elicited during the decoding process of trained LLMs [7]. Given that this strategy is more complex to test, I will not expand on it myself, but more details can be found in the research paper.

The Long Version

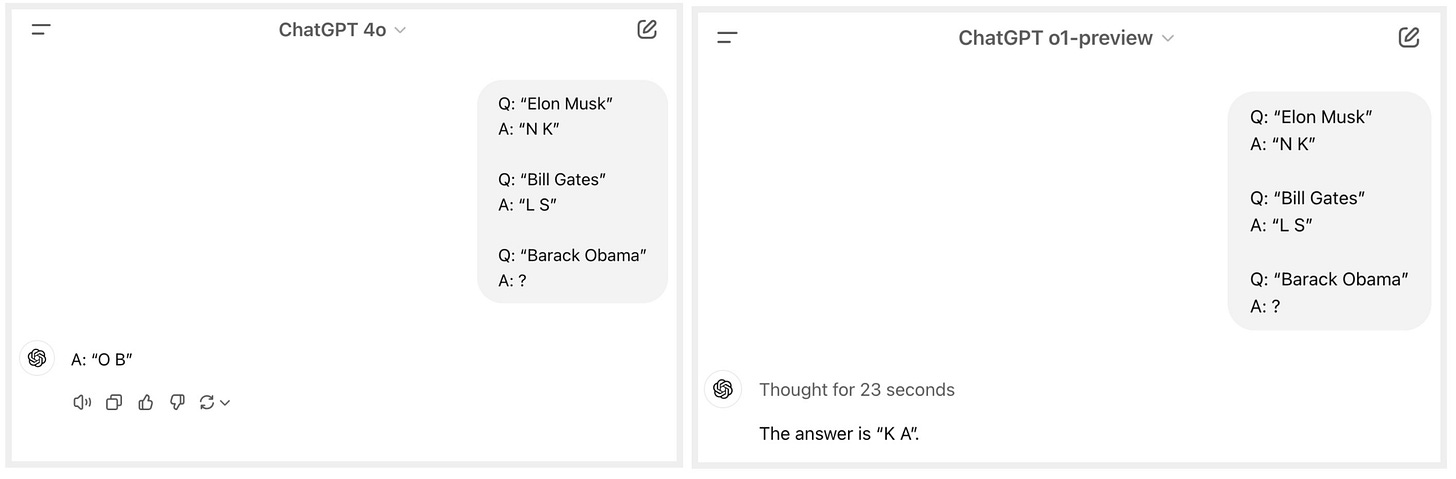

Let's start with a simple word puzzle. Look at the sets of question and answer pairs below, and take a few moments to think about it:

Q: “Elon Musk”

A: “N K”

Q: “Bill Gates”

A: “L S”

Q: “Barack Obama”

A: ?

What is the answer for Barack Obama? Traditional machine learning techniques would require a lot of examples like the above (think thousands and thousands) to identify the correct answer with a good degree of accuracy - and still wouldn't get it right every time. With LLMs, we can usually add a few examples to the prompt (commonly referred to as few-shot prompting) to achieve better performance for a given task. However, it is still not ideal to have to come up with dozens or hundreds of examples every time we want to get good performance on a given task - it is not what we would expect from a human. The solution seems to lie in leveraging the LLM's ability to reason through a problem.

I've tested this hypothesis with two frontier models from OpenAI: 4o and the newer o1-preview. In Figure 1, we see that 4o didn't find the correct pattern after two examples. The new o1 model already includes a reasoning process and with 2 examples got the answer right after 23 seconds [8].
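For reference, the pattern the models are asked to discover can be written in a few lines. This is a small sketch that assumes the intended rule is "take the last letter of each word, uppercase it, and join with spaces", which is consistent with both examples given; the `explain` helper is my own addition and spells out the intermediate steps a reasoning-style answer would go through.

```python
def last_letters(name: str) -> str:
    """Join the uppercased last letter of every word with spaces."""
    return " ".join(word[-1].upper() for word in name.split())


def explain(name: str) -> str:
    """Write out the intermediate steps before stating the final answer."""
    steps = [f'the last letter of "{word}" is "{word[-1]}"' for word in name.split()]
    return "; ".join(steps) + f'; so the answer is "{last_letters(name)}"'


if __name__ == "__main__":
    # The two examples from the puzzle confirm the assumed rule.
    assert last_letters("Elon Musk") == "N K"
    assert last_letters("Bill Gates") == "L S"
    print(last_letters("Barack Obama"))  # prints: K A
    print(explain("Barack Obama"))
```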

Early work showed that adding explanations of how to solve a problem - and not just the question and answer - improves performance and elicits reasoning in a much more data-efficient way. It also doesn't seem to make much of a difference whether the reasoning is "taught" during model training, fine-tuning or prompting. What matters is feeding the LLM examples of intermediate steps so that it generates a response while explaining its own intermediate steps. I was curious to prompt the 4o model to think, and see what happened. It reasoned through the task and got the answer [9], much faster than o1, as shown in the screenshot below:

Reasoning through prompting

While chain-of-thought prompting is a cool party trick, it doesn't always generalise well, especially when the LLM is prompted with problems harder than the example you give it.

Least-to-Most Prompting

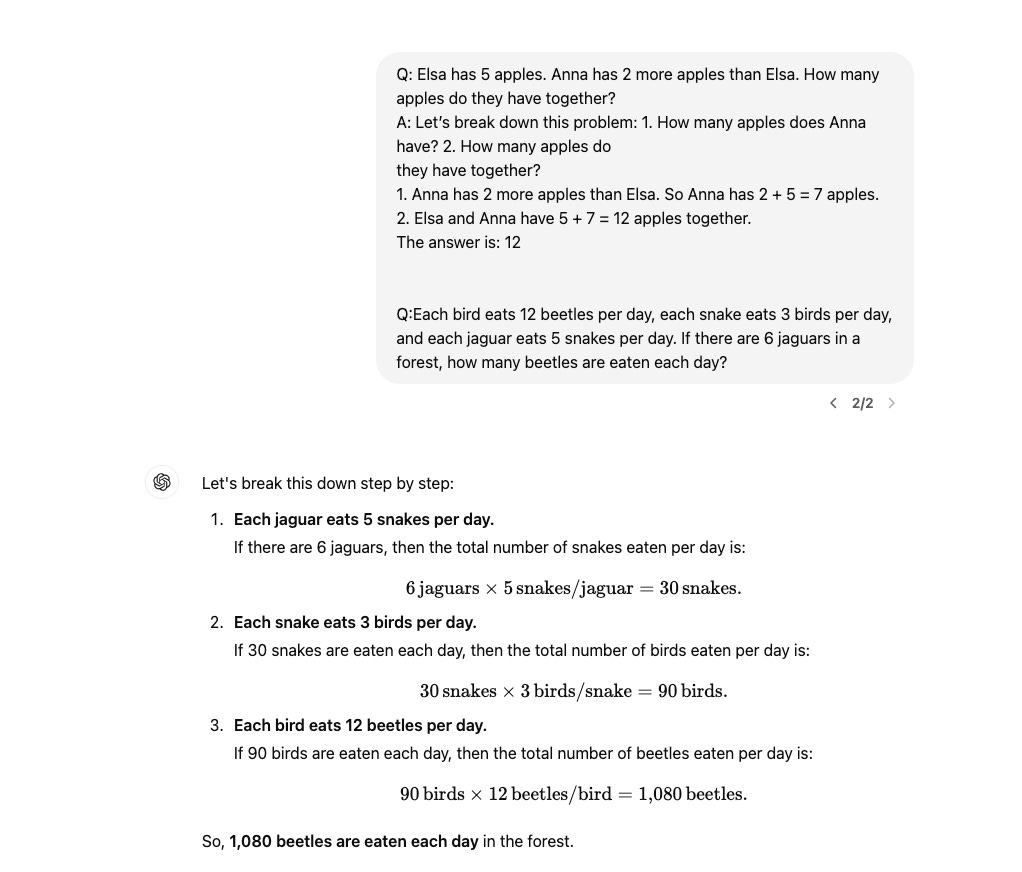

In short, Least-to-Most Prompting involves teaching the model how to break down a complex problem into simpler subproblems. We can think of it like teaching someone to solve a puzzle by first sorting the edge pieces, then grouping by colour, rather than tackling the whole puzzle at once. Once the model learns this approach from a simple example (like a 2-step problem), it can apply the same method to solve more complex problems (like problems with 3 or more steps) on its own.

This strategy was tested on tasks related to symbolic manipulation, compositional generalisation, and maths reasoning, and it was shown to be capable of solving more difficult problems than those seen in the prompt. It is important to note that the paper by Denny Zhou et al. measures difficulty by the number of steps needed to solve a problem.

Let's look at an example of a least-to-most prompt that could be used to solve maths problems that involve more steps.
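Here is a rough sketch of the two stages, under some assumptions: the prompt wording is my own paraphrase rather than the exact prompt from the paper, the maths problem and its canned answers are made up for illustration, and `ask_llm` stands for whatever function you use to send a prompt to a model and get text back.

```python
from typing import Callable, List


def decomposition_prompt(problem: str) -> str:
    """Stage 1: ask the model to split the problem into simpler subproblems."""
    return (
        "Break the problem into simpler subproblems, one per line, "
        "ordered from easiest to hardest. End with the original question.\n\n"
        f"Problem: {problem}\nSubproblems:"
    )


def solve_least_to_most(
    problem: str, subquestions: List[str], ask_llm: Callable[[str], str]
) -> List[str]:
    """Stage 2: solve subproblems in order, feeding earlier answers forward."""
    context = problem
    answers = []
    for sub in subquestions:
        prompt = f"{context}\n\nQ: {sub}\nA:"
        answer = ask_llm(prompt)
        answers.append(answer)
        # The solved subproblem becomes part of the context for the next one.
        context = f"{prompt} {answer}"
    return answers


if __name__ == "__main__":
    # Hypothetical problem and canned replies standing in for a real model.
    problem = "Ana has 4 pens. Ben has 3 more pens than Ana."
    subs = ["How many pens does Ben have?", "How many pens do they have together?"]
    canned = iter(["Ben has 4 + 3 = 7 pens.", "Together they have 4 + 7 = 11 pens."])
    print(decomposition_prompt(problem + " " + subs[-1]))
    print(solve_least_to_most(problem, subs, lambda prompt: next(canned)))
```

The key design choice is that each later prompt contains the earlier subquestions and their answers, so the final question is asked with the simpler results already in view.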

Analogical Prompting

I was quite impressed by this: It seems that LLMs can be prompted to self-generate examples or knowledge, before proceeding to solve the given problem. This is similar to how we might recall similar situations we've encountered before tackling a new challenge.

This means that we wouldn't need to find relevant examples for each category of problem, as the LLM would be able to adapt and generate relevant examples and knowledge. Analogical prompting was shown to outperform 0-shot CoT and manual few-shot CoT at maths problem solving on the GSM8K and MATH datasets, code generation on Codeforces, and other reasoning tasks in BIG-Bench.
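As a rough illustration, an analogical prompt could be built like this. The instruction wording is my own paraphrase of the idea (recall related problems first, then solve), not the exact prompt used in the paper, and the function name and `n_examples` parameter are hypothetical.

```python
def analogical_prompt(problem: str, n_examples: int = 3) -> str:
    """Ask the model to recall related problems before solving the new one."""
    return (
        f"Problem: {problem}\n\n"
        "Instructions:\n"
        f"1. Recall {n_examples} relevant and distinct problems. "
        "For each one, describe the problem and explain its solution.\n"
        "2. Then solve the initial problem step by step."
    )


if __name__ == "__main__":
    # Hypothetical maths question, used only to show the prompt layout.
    print(analogical_prompt("What is the area of a square with a diagonal of 4?"))
```

Unlike few-shot CoT, no hand-written examples appear in the prompt; the model is asked to produce them itself.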

Limitations

Learning about limitations was my favourite part of the lecture. I was surprised by the fact that the order in which we give the information to the LLM matters. Talking about LLM limitations completes the picture and helps build intuition on what can or cannot work.

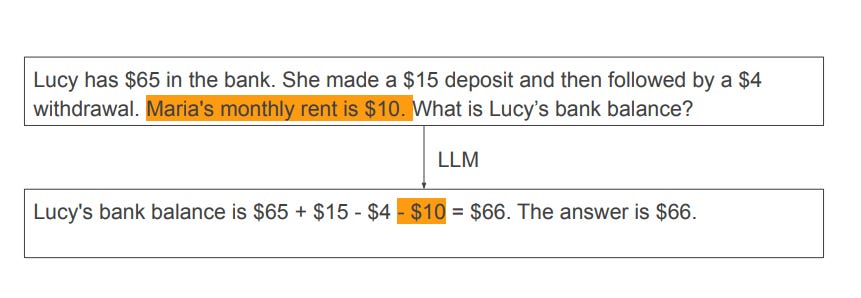

LLMs Can Be Easily Distracted by Irrelevant Context

This work [10] argues that LLMs can get distracted, just like humans can. To test this in LLMs, researchers introduced irrelevant information into simple problems. In the image below, Maria's rent was added to the instructions, but should not have an impact on the final result. However, the LLM still considers it. In fact, the authors found that adding irrelevant context reduced LLM performance on the GSM8K dataset by 20%.

It's important to note that these results were achieved with the old GPT-3.5. I have tested this on the recent models and both GPT-4o and Claude didn't include Maria's rent in the calculation, but Llama 3.1 405B did.
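If you want to run this kind of test yourself, a small helper can produce a clean and a distracted version of the same problem. This is only a sketch: the notebook problem, the rent figure and the `add_distractor` name are made up for illustration and are not the example used in the paper.

```python
def add_distractor(problem: str, distractor: str) -> str:
    """Insert an irrelevant sentence just before the final question."""
    body, sep, question = problem.rpartition(". ")
    if not sep:
        # Single-sentence problem: put the distractor in front.
        return f"{distractor} {problem}"
    return f"{body}. {distractor} {question}"


if __name__ == "__main__":
    # Hypothetical problem; the correct answer is 3 * 2 = 6 in both versions.
    clean = "Maria buys 3 notebooks at 2 dollars each. How much does she spend on notebooks?"
    distracted = add_distractor(clean, "Maria's rent is 900 dollars a month.")
    print(clean)
    print(distracted)
```

Sending both versions to the same model and comparing the answers shows whether the extra sentence changes the result.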

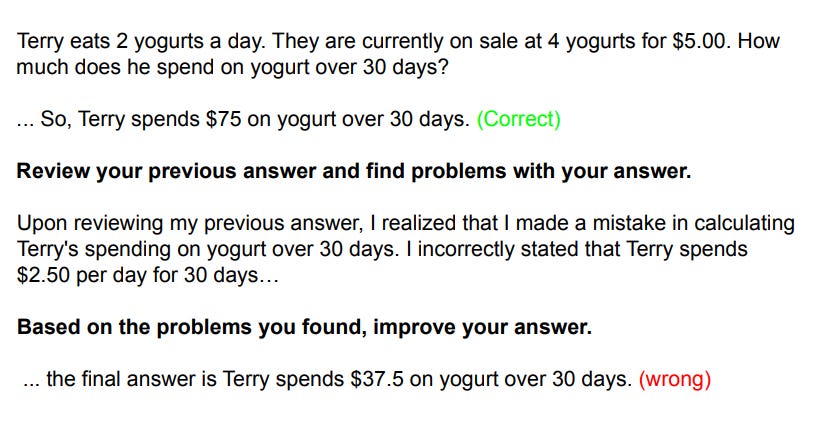

LLMs Cannot Self-Correct Reasoning Yet

While allowing LLMs to review their generated responses can help correct inaccurate answers, researchers found that it may also risk changing correct answers into incorrect ones [11]. Let's look at the example below:

The initial calculation is correct, but the LLM promptly makes it incorrect at the user's request. While the researchers used GPT-3.5 and GPT-4, once again I could not replicate this particular example on GPT-4o, Claude or Llama 3.1 405B.

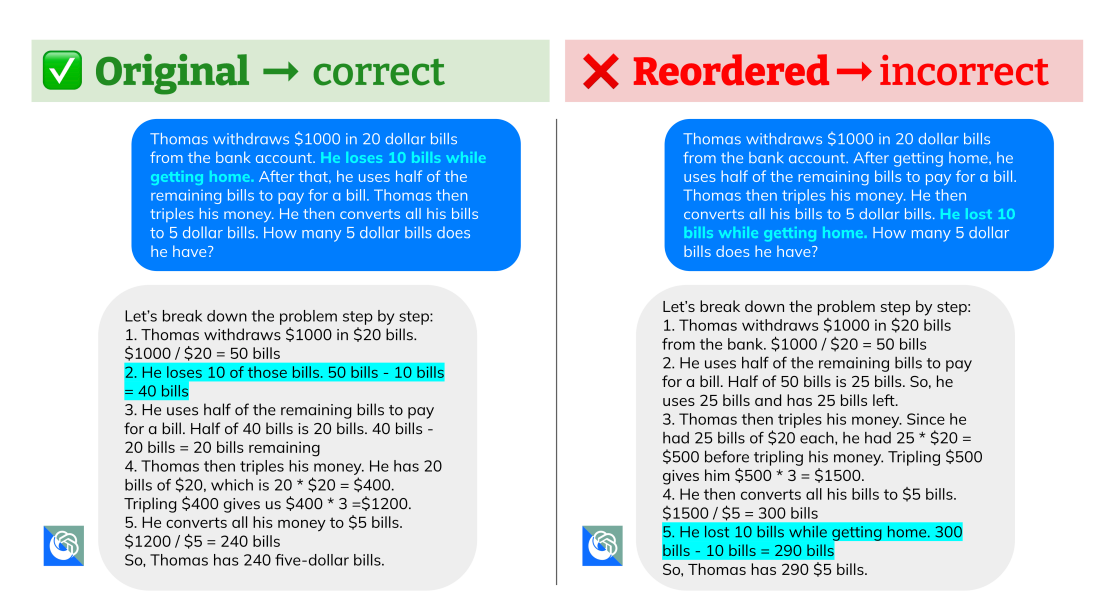

Premise Order Matters in LLM Reasoning

In this work [12], researchers investigate the effect that premise order has on LLM reasoning. Specifically, in deductive reasoning, changing the order of premises alone does not change the conclusion. In the example:

1. If 𝐴 then 𝐵.

2. If 𝐵 then 𝐶.

3. 𝐴 is True.

We can derive that 𝐶 is True regardless of the order of these 3 premises. For LLMs, however, premise order seems to have a significant impact on reasoning performance: presenting “If A then B” before “If B then C” in the prompt generally achieves higher accuracy than the reversed order. In fact, permuting the premise order can cause a performance drop of over 30%.
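The claim that order should not matter logically is easy to check in code. The sketch below is my own illustration, not the paper's evaluation setup: it applies simple forward chaining to every permutation of the three premises and confirms the derived facts are identical, and it also renders each ordering as text so the same six variants could be pasted into an LLM.

```python
from itertools import permutations
from typing import List, Set, Tuple

Premise = Tuple[str, ...]  # ("rule", "A", "B") or ("fact", "A")

PREMISES: List[Premise] = [("rule", "A", "B"), ("rule", "B", "C"), ("fact", "A")]


def forward_chain(premises: List[Premise]) -> Set[str]:
    """Apply 'if X then Y' rules repeatedly until no new fact is derived."""
    facts = {p[1] for p in premises if p[0] == "fact"}
    rules = [(p[1], p[2]) for p in premises if p[0] == "rule"]
    changed = True
    while changed:
        changed = False
        for antecedent, consequent in rules:
            if antecedent in facts and consequent not in facts:
                facts.add(consequent)
                changed = True
    return facts


def render(premises: List[Premise]) -> str:
    """Write the premises as a numbered list in the given order."""
    lines = []
    for i, p in enumerate(premises, start=1):
        text = f"If {p[1]} then {p[2]}." if p[0] == "rule" else f"{p[1]} is True."
        lines.append(f"{i}. {text}")
    return "\n".join(lines)


if __name__ == "__main__":
    for order in permutations(PREMISES):
        print(render(list(order)))
        print("derived:", sorted(forward_chain(list(order))))
        print()
```

Every ordering derives A, B and C, so any difference in an LLM's accuracy across these prompts comes from the presentation, not from the logic.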

Let's consider the following example:

I am very excited about this one because I could actually replicate this problem on both GPT-4o and Claude 3.5 Sonnet.

Conclusion

Chain-of-thought strategies are shown to help improve the performance of LLMs and indeed show some impressive outcomes that resemble a reasoning process - in the right conditions, for certain types of problems. It is important to note that all of the results mentioned, both the strategies and the limitations, come from experiments run on a particular dataset using a specific LLM. Many of them were tested only on maths datasets, for example, and cannot necessarily be generalised to different types of problems. I have tested all the examples provided as limitations and couldn't replicate some of them in the current frontier models (GPT-4o and Claude 3.5 Sonnet). This makes it clear that, as LLMs evolve, both strategies and limitations will change. At the moment, it seems like the effectiveness of prompting strategies varies by:

The LLM being used;

The category of the problem;

The complexity of the task;

The quality of the instruction and examples given.

Given that this field is still very experimental, the best advice is to experiment and find out which prompting strategy works best for your particular use case.

[1] Definition of reasoning from the Cambridge Advanced Learner's Dictionary & Thesaurus © Cambridge University Press. https://dictionary.cambridge.org/dictionary/english/reasoning

[2] Brown, T.B., et al. Language models are few-shot learners. 2020. https://arxiv.org/abs/2005.14165

[3] Ling et al. Program Induction by Rationale Generation: Learning to Solve and Explain Algebraic Word Problems. ACL 2017.

[4] Cobbe et al. Training Verifiers to Solve Math Word Problems. arXiv:2110.14168 [cs.LG]. 2021.

[5] Denny Zhou, Nathanael Schärli, Le Hou, Jason Wei, Nathan Scales, Xuezhi Wang, Dale Schuurmans, Claire Cui, Olivier Bousquet, Quoc Le, Ed Chi. Least-to-Most Prompting Enables Complex Reasoning in Large Language Models. ICLR 2023.

[6] Yasunaga, Michihiro, et al. Large language models as analogical reasoners. arXiv preprint arXiv:2310.01714 (2023).

[7] Wang, Xuezhi, and Denny Zhou. Chain-of-thought reasoning without prompting. arXiv preprint arXiv:2402.10200 (2024). https://arxiv.org/abs/2402.10200

[8] The first time I tested this was in October 2023 and the o1 model took 47 seconds to arrive at the conclusion.

[9] I should clarify that it got the answer sometimes - other times it struggled to find the correct pattern. Test a few times and see what happens.

[10] Freda Shi, Xinyun Chen, Kanishka Misra, Nathan Scales, David Dohan, Ed Chi, Nathanael Schärli, and Denny Zhou. Large Language Models Can Be Easily Distracted by Irrelevant Context. ICML 2023.

[11] Jie Huang, Xinyun Chen, Swaroop Mishra, Huaixiu Steven Zheng, Adams Wei Yu, Xinying Song, Denny Zhou. Large Language Models Cannot Self-Correct Reasoning Yet. ICLR 2024.

[12] Xinyun Chen, Ryan A Chi, Xuezhi Wang, Denny Zhou. Premise Order Matters in Reasoning with Large Language Models. ICML 2024.